Blazor and the Web MIDI API

Friday, 9pm

Yesterday, while I was speaking to the NE:Tech user group about V-Drum Explorer, someone mentioned the Web MIDI API – a way of accessing local MIDI devices from a browser.

Now my grasp of JavaScript is tenuous at best… but that’s okay, because I can write C# using Blazor. So in theory, I could build an equivalent to V-Drum Explorer, but running entirely in the browser using WebAssembly. That means I’d never have to worry about the installer again…

Now, I don’t want to get ahead of myself here. I suspect that WPF and later MAUI are still the way forward, but this should at least prove a fun investigation. I’ve never used the Web MIDI API, and I haven’t used Blazor for a few years. This weekend I’m sure I can find a few spare hours, so let’s see how far I can get.

Just for kicks, I’m going to write up my progress in this blog post as I go, adding a timestamp periodically so we can see how long it takes to do things (admittedly whilst writing it up at the same time). I promise not to edit this post other than for clarity, typos etc – if my ideas turn out to be complete failures, such is life.

I have a goal in mind for the end of the weekend: a Blazor web app, running locally to start with (deploying it to k8s shouldn’t be too hard, but isn’t interesting at this point), which can detect my drum module and list the names of the kits on the module.

Here’s the list of steps I expect to take. We’ll see how it goes.

- Use JSFiddle to try to access the Web MIDI API. The aim is to list the ports, open an input and output port, listen for MIDI messages (dumped to the console), and send a hard-coded SysEx message requesting the name of kit 1.

- Start a new Blazor project, and check I can get it to work.

- Try to access the MIDI ports in Blazor – just listing the ports to start with.

- Expand the MIDI access test to do everything from step 1.

- Loop over all the kits instead of just the first one – this will involve doing checksum computation in the app, copying code from the V-Drum Explorer project. If I get this far, I’ll be very happy.

- As a bonus step, if I get this far, it would be really interesting to try to depend on V-Drum Explorer projects (VDrumExplorer.Model and VDrumExplorer.Midi) after modifying the MIDI project to use Web MIDI. At that point, the code for the Blazor app could be really quite simple… and displaying a read-only tree view probably wouldn’t be too hard. Maybe.

Sounds like I have a fun weekend ahead of me.

Saturday morning

Step 1: JSFiddle + MIDI

Time: 07:08

Turn on the TD-27, bring up the MIDI API docs and JSFiddle, and let’s give it a whirl…

It strikes me that it might be useful to be able to save some efforts here. A JSFiddle account may not be necessary for that, but it may make things easier… let’s create an account.

First problem: I can’t see how to make the console (which is where I expect all the results to end up) into more than a single line in the bottom right hand corner. I could open up Chrome’s console, of course, but as JSFiddle has one, it would be nice to use that. Let’s see what happens if I just write to it anyway… ah, it expands as it has data. Okay, that’ll do.

Test 1: initialize MIDI at all

The MIDI API docs have a really handy set of examples which I can just copy/paste. (I’m finding it hard to resist the temptation to change the whitespace to something I’m more comfortable with, but hey…)

So, copy the example in 9.1:

“Failed to get MIDI access – SecurityError: Failed to execute ‘requestMIDIAccess’ on ‘Navigator’: Midi has been disabled in this document by Feature Policy.”

Darn. Look up Feature-Policy on MDN, then a search for “JSFiddle Feature-Policy” finds https://github.com/jsfiddle/jsfiddle-issues/issues/1106 – which is specifically about MIDI access! And it has a workaround… apparently things work slightly differently with a saved Fiddle. Let’s try saving and reloading…

"MIDI ready!"

Hurray!

Test 2: list the MIDI ports

Copy/paste example 9.3 into the Fiddle (with a couple of extra lines to differentiate between input and output), and call listInputsAndOutputs from onMIDISuccess…

"MIDI ready!" "Input ports" "Input port [type:'undefined'] id:'undefined' manufacturer:'undefined' name:'undefined' version:'undefined'" "Input port [type:'undefined'] id:'undefined' manufacturer:'undefined' name:'undefined' version:'undefined'" "Input port [type:'undefined'] id:'undefined' manufacturer:'undefined' name:'undefined' version:'undefined'" "Input port [type:'undefined'] id:'undefined' manufacturer:'undefined' name:'undefined' version:'undefined'" "Input port [type:'undefined'] id:'undefined' manufacturer:'undefined' name:'undefined' version:'undefined'" "Input port [type:'undefined'] id:'undefined' manufacturer:'undefined' name:'undefined' version:'undefined'" "Output ports" "Output port [type:'undefined'] id:'undefined' manufacturer:'undefined' name:'undefined' version:'undefined'" "Output port [type:'undefined'] id:'undefined' manufacturer:'undefined' name:'undefined' version:'undefined'" "Output port [type:'undefined'] id:'undefined' manufacturer:'undefined' name:'undefined' version:'undefined'" "Output port [type:'undefined'] id:'undefined' manufacturer:'undefined' name:'undefined' version:'undefined'" "Output port [type:'undefined'] id:'undefined' manufacturer:'undefined' name:'undefined' version:'undefined'" "Output port [type:'undefined'] id:'undefined' manufacturer:'undefined' name:'undefined' version:'undefined'"

Hmm. That’s not ideal. It’s clearly found some ports (six inputs and outputs? I’d only expect one or two), but it can’t use any properties in them.

If I add console.log(output) in the loop, it shows “entries”, “keys”, “values”, “forEach”, “has” and “get”, suggesting that the example is iterating over the properties of a collection rather than the entries.

Using for (var input in midiAccess.inputs.values()) still doesn’t give me anything obviously useful. (Keep in mind I know very little JavaScript – I’m sure the answer is obvious to many of you.)
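As a side note, the behaviour above looks like the usual difference between `for...in` (which walks enumerable property names) and `for...of` or `forEach` (which walk the values). Here's a minimal sketch of that difference, using a plain `Map` as a stand-in for `midiAccess.inputs`. The port object is made up for illustration. A plain `Map` has no enumerable properties at all, whereas the browser's MIDI map presumably exposes its methods as enumerable, which would explain the six "undefined" ports.

```javascript
// A plain Map standing in for midiAccess.inputs, which is map-like.
// The port object is hypothetical, invented for this sketch.
const inputs = new Map([
  ["input-0", { id: "input-0", name: "5- TD-27" }]
]);

// for...in enumerates property *names*. A plain Map has no enumerable
// properties, so this loop body never runs.
const namesFromForIn = [];
for (const key in inputs) {
  namesFromForIn.push(key);
}

// for...of asks the iterator for the values themselves.
const namesFromForOf = [];
for (const input of inputs.values()) {
  namesFromForOf.push(input.name);
}

// forEach also visits each value.
const namesFromForEach = [];
inputs.forEach(input => namesFromForEach.push(input.name));

console.log(namesFromForIn, namesFromForOf, namesFromForEach);
```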

Let’s try using forEach instead like this:

    function listInputsAndOutputs( midiAccess ) {
        console.log("Input ports");
        midiAccess.inputs.forEach(input => {
            console.log( "Input port [type:'" + input.type + "'] id:'" + input.id +
                "' manufacturer:'" + input.manufacturer + "' name:'" + input.name +
                "' version:'" + input.version + "'" );
        });
        console.log("Output ports");
        midiAccess.outputs.forEach(output => {
            console.log( "Output port [type:'" + output.type + "'] id:'" + output.id +
                "' manufacturer:'" + output.manufacturer + "' name:'" + output.name +
                "' version:'" + output.version + "'" );
        });
    }

Now the output is much more promising:

"MIDI ready!" "Input ports" "Input port [type:'input'] id:'input-0' manufacturer:'Microsoft Corporation' name:'5- TD-27' version:'10.0'" "Output ports" "Output port [type:'output'] id:'output-1' manufacturer:'Microsoft Corporation' name:'5- TD-27' version:'10.0'"

Test 3: dump MIDI messages to the console

I can just hard-code the input and output port IDs for now – when I get into C#, I can do something more reasonable.

Adapting example 9.4 from the Web MIDI docs very slightly, we get:

    function logMidiMessage(message) {
        var line = "MIDI message: ";
        // Use the parameter rather than relying on a global "event" object.
        for (var i = 0; i < message.data.length; i++) {
            line += "0x" + message.data[i].toString(16) + " ";
        }
        console.log(line);
    }

    function onMIDISuccess(midiAccess) {
        var input = midiAccess.inputs.get('input-0');
        input.onmidimessage = logMidiMessage;
    }

Now when I hit a drum, I see MIDI messages – and likewise when I make a change on the module (e.g. switching kit) that gets reported as well – so I know that SysEx messages are working.

Test 4: request the name of kit 1

Timestamp: 07:44

At this point, I need to go back to the V-Drum Explorer code and the TD-27 docs. The kit name is in the first 12 bytes of the KitCommon container, which is at the start of each Kit container. The Kit container for kit 1 starts at 0x04_00_00_00, so I just need to create a Data Request message for the 12 bytes starting at that address. I can do that just by hijacking a command in my console app, and getting it to print out the MIDI message. I need to send these bytes:

F0 41 10 00 00 00 63 11 04 00 00 00 00 00 00 0C 70 F7
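For reference, here's a minimal sketch of how those bytes fit together. The function names and parameter shapes are hypothetical (they aren't the V-Drum Explorer API). The device ID, model ID, address and size are taken from the bytes above. The checksum is the usual Roland one: the address, size and checksum bytes should sum to a multiple of 128.

```javascript
// Roland-style checksum: the sum of the given bytes plus the checksum
// must be a multiple of 128.
function rolandChecksum(bytes) {
  const sum = bytes.reduce((total, b) => total + b, 0);
  return (128 - (sum % 128)) % 128;
}

// Builds a Data Request (RQ1, command 0x11) message.
// The function name and parameters are hypothetical, for illustration.
function buildDataRequest(deviceId, modelId, address, size) {
  const payload = [...address, ...size];
  return [
    0xf0, 0x41,              // SysEx start, Roland manufacturer ID
    deviceId, ...modelId,
    0x11,                    // RQ1: Data Request
    ...payload,
    rolandChecksum(payload),
    0xf7                     // SysEx end
  ];
}

const kit1NameRequest = buildDataRequest(
  0x10,
  [0x00, 0x00, 0x00, 0x63],
  [0x04, 0x00, 0x00, 0x00],  // start of the Kit container for kit 1
  [0x00, 0x00, 0x00, 0x0c]   // 12 bytes
);

console.log(kit1NameRequest.map(b => b.toString(16).padStart(2, "0")).join(" "));
```

This should reproduce the message above, including the 0x70 checksum.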

That should be easy enough, adapting example 9.5 of the Web MIDI docs…

(Note of annoyance at this point: forking in JSFiddle doesn’t seem to be working properly for me. I get a new ID, but I can’t change the title in a way that shows up in “Your fiddles” properly. Ah – it looks like I need to do “fork, change title, set as base”. Not ideal, but it works.)

So I’d expect this code to work:

    var output = midiAccess.outputs.get('output-1');
    var requestMessage = [0xf0, 0x41, 0x10, 0x00, 0x00, 0x00, 0x63, 0x11, 0x04, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x0c, 0x70, 0xf7];
    output.send(requestMessage);

But I don’t see any sign that the kit has sent back a response – and worse, if I add console.log("After send"); to the script, that doesn’t get logged either. Maybe it’s throwing an exception?

Aha – yes, there’s an exception:

Failed to execute ‘send’ on ‘MIDIOutput’: System exclusive message is not allowed at index 0 (240).

Ah, my requestMIDIAccess call wasn’t specifically requesting SysEx access. It’s interesting that it was able to receive SysEx messages even though it couldn’t send them.

After changing the call to pass { sysex: true }, I get back a MIDI message which looks like it probably contains the kit name. Hooray! Step 1 done :)
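The change itself is tiny. As a sketch (the wrapper function and its `nav` parameter are my own invention, added only so the code can be exercised outside a browser):

```javascript
// Requests MIDI access including SysEx permission.
// "nav" is passed in instead of using the global navigator so that the
// sketch can run with a stub; that parameter is an assumption of this sketch.
function requestSysExAccess(nav) {
  if (!nav || typeof nav.requestMIDIAccess !== "function") {
    return Promise.reject(new Error("Web MIDI API not available"));
  }
  // Without { sysex: true }, send() rejects messages starting with 0xF0.
  return nav.requestMIDIAccess({ sysex: true });
}

// In a browser, with onMIDISuccess as before and some failure callback:
// requestSysExAccess(navigator).then(onMIDISuccess, onMIDIFailure);
```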

Timestamp: 08:08 (So all of this took an hour. That’s not too bad.)

Step 2: Vanilla Blazor project

Okay, within the existing VDrumExplorer solution, add a new project.

Find the Blazor project template, choose WebAssembly… and get interested by the “ASP.NET Core Hosted” option. I may want that eventually, but let’s not bother for now. (Side-thought: for the not-hosted version, I may be able to try it just by hosting the files in Google Cloud Storage. Hmmm.)

Let’s try to build and run… oh, it failed:

The "ResolveBlazorRuntimeDependencies" task failed unexpectedly. error MSB4018: System.IO.FileNotFoundException: Could not load file or assembly 'VDrumExplorer.Blazor.dll' or one of its dependencies. The system cannot find the file specified.

That’s surprising. It’s also surprising that it looks like it’s got ASP.NET Core, given that I didn’t tick the box.

There’s a Visual Studio update available… maybe that will help? Upgrading from 16.6.1 to 16.6.3…

For good measure, let’s blow away the new project in case the project template has changed in 16.6.3.

Time to make a coffee…

Try again with the new version… nope, still failing in the same way.

I wonder whether I’ve pinned the .NET Core SDK to an older version and that’s causing a problem?

Ah, yes – there’s a global.json file in Drums, and that specifies 3.1.100.

Aha! Just updating that to use 3.1.301 works. A bit of time wasted, but not too bad.

Running the app now works, including hitting a breakpoint. Time to move onto MIDI stuff.

Timestamp: 08:33

Step 3: Listing MIDI ports in Blazor

Substep 1: create a new page

Let’s create a new Razor page. I’d have thought that would be “Add -> New Item -> Razor Page” but that comes up with a .cshtml file instead of the .razor file that everything else is.

Maybe despite being in a “Pages” directory with a .razor extension, these aren’t Razor Pages but Razor Components? Looks like it.

I’m feeling I could get out of my depth really rapidly here. If I were doing this “properly” I’d now read a bunch of docs on Razor. (I’ve been to various talks on it, and used it before, but I haven’t done either for quite a while.)

The “read up on the fundamentals first” and “hack, copy, paste, experiment” approaches to learning a new technology both have their place… I just generally feel a little less comfortable with the latter. It definitely gets to some results quicker, but doesn’t provide a good foundation for doing real work.

Still, I’m firmly in experimentation territory here, so hack on.

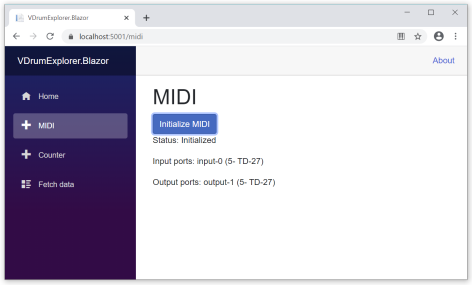

The new page has an “initialize MIDI” button, and two labels for input ports and output ports.

Add this to the nav menu, run it, and all seems well. (Eventually I may want to make this the default landing page, but that can come later.)

Time to dive into JS interop…

Substep 2: initialize MIDI

Let’s not rush to listing the ports – just initializing MIDI at all would be good. So add a status field and label, and start looking up JS interop.

I’ve heard of Blazor University before, so that’s probably a good starting point. And yes, there’s a section about JavaScript interop. It’s worryingly far down the TOC (i.e. I’m skipping an awful lot of other information to get that far) but we’ll plough on.

Calling the requestMIDIAccess function from InitializeMidi is relatively straightforward, with one caveat: I don’t know how to express the result type. I know it’s a JavaScript promise, but how do I refer to that within the C# code? Let’s just use object to start with:

    private async Task InitializeMidi()
    {
        var promise = await JSRuntime.InvokeAsync<object>("navigator.requestMIDIAccess", TimeSpan.FromSeconds(3));
    }

Looking more carefully at some documentation, it doesn’t look like I can effectively keep a reference to a JavaScript object within the C# code – everything is basically JSON serialized/deserialized across the boundary.

That’s fairly reasonable – but it means we’ll need to write more JavaScript code, I suspect.

Plan:

- Write a bunch of JavaScript code in the Razor page. (Yes, I’d want to move it if I were doing this properly…)

- Keep a global midi variable to keep “the initialized MIDI access”

- Declare JavaScript functions for everything I need to do with MIDI, that basically proxy through the midi variable

I’d really hoped to avoid writing any JavaScript while running Blazor, but never mind.

Plan fails on first step: we’re not meant to write scripts within Razor pages. Okay, let’s create a midi.js script and include that in index.html.

Unfortunately, the asynchrony turns out to be tricky. We really want to be able to pass a callback to the JavaScript code, but that involves creating a DotNetObjectReference and managing lifetimes. That’s slightly annoying and fiddly.

I’ll come back to that eventually, but for now I can just keep all the state in JavaScript, and ask for the status after waiting for a few seconds:

    private async Task InitializeMidi()
    {
        await JSRuntime.InvokeAsync<object>("initializeMidi", TimeSpan.FromSeconds(3));
        await Task.Delay(3000);
        status = await JSRuntime.InvokeAsync<string>("getMidiStatus");
    }

Result: yes, I can see that MIDI has been initialized. The C# code can fetch the status from the JavaScript.

That’s all the time I have for now – I have a meeting at 9:30. When I come back, I’ll look at making the JavaScript a bit cleaner, and writing a callback.

Timestamp: 09:25

Substep 3: use callbacks and a better library pattern

Timestamp: 10:55

Back again.

Currently my midi.js file just introduces functions into the global namespace. Let’s follow the W3C JavaScript best practices page guidance instead:

    var midi = function() {
        var access = null;
        var status = "Uninitialized";

        function initialize() {
            // Declared with var so they don't leak out as implicit globals.
            var success = function (midiAccess) {
                access = midiAccess;
                status = "Initialized";
            };
            var failure = (message) => status = "Failed: " + message;
            navigator.requestMIDIAccess({ sysex: true })
                .then(success, failure);
        }

        function getStatus() {
            return status;
        }

        return {
            initialize: initialize,
            getStatus: getStatus
        };
    }();

Is that actually any good? I really don’t know – but it’s at least good enough for now.

Next, let’s work out how to do a callback. Ideally, we’d be able to return something from the JavaScript initialize() method and await that. There’s an interesting blog post about doing just that, but it’s really long. (That’s not a criticism – it’s a great post that explains everything really well. It’s just it’s very involved.)

I suspect that a bit of hackery will allow a “simpler but less elegant” solution, which is fine by me. Let’s create a PromiseHandler class with a proxy object for JavaScript:

    using Microsoft.JSInterop;
    using System;
    using System.Threading.Tasks;

    namespace VDrumExplorer.Blazor
    {
        public class PromiseHandler : IDisposable
        {
            public DotNetObjectReference<PromiseHandler> Proxy { get; }
            private readonly TaskCompletionSource<int> tcs;

            public PromiseHandler()
            {
                Proxy = DotNetObjectReference.Create(this);
                tcs = new TaskCompletionSource<int>();
            }

            [JSInvokable]
            public void Success() =>
                tcs.TrySetResult(0);

            [JSInvokable]
            public void Failure(string message) =>
                tcs.TrySetException(new Exception(message));

            public Task Task => tcs.Task;

            public void Dispose() => Proxy.Dispose();
        }
    }

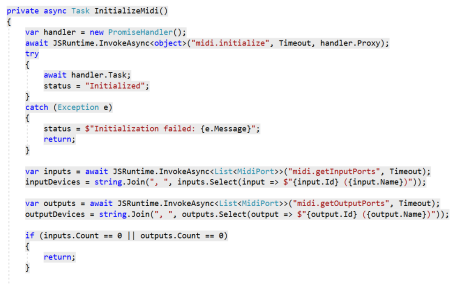

We can then create an instance of that in InitializeMidi, and pass the proxy to the JavaScript:

    private async Task InitializeMidi()
    {
        var handler = new PromiseHandler();
        await JSRuntime.InvokeAsync<object>("midi.initialize", TimeSpan.FromSeconds(3), handler.Proxy);
        try
        {
            await handler.Task;
            status = "Initialized";
        }
        catch (Exception e)
        {
            status = $"Initialization failed: {e.Message}";
        }
    }

The JavaScript then uses the proxy object for its promise handling:

    function initialize(handler) {
        // Declared with var so they don't leak out as implicit globals.
        var success = function (midiAccess) {
            access = midiAccess;
            handler.invokeMethodAsync("Success");
        };
        var failure = message => handler.invokeMethodAsync("Failure", message);
        navigator.requestMIDIAccess({ sysex: true })
            .then(success, failure);
    }

It’s all quite explicit, but it seems to do the job, at least for now, and didn’t take too long to get working.
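For comparison, here's a sketch of the "return something and await it" idea mentioned earlier. As far as I understand the Blazor JS interop documentation, `InvokeAsync` waits for a returned promise to settle, so returning the promise (resolved to something JSON-friendly rather than the MIDI access object itself) might avoid the need for a proxy. This is untested against the Blazor version used here, and `createMidi` and its `nav` parameter are hypothetical names introduced only so the sketch can run with a stub.

```javascript
// Hypothetical factory: "nav" stands in for the browser's navigator.
function createMidi(nav) {
  var access = null;

  // Returns a promise that resolves to a plain string, which can be
  // serialized as JSON, instead of the MIDI access object itself.
  function initialize() {
    return nav.requestMIDIAccess({ sysex: true }).then(function (midiAccess) {
      access = midiAccess;
      return "Initialized";
    });
  }

  function isInitialized() {
    return access !== null;
  }

  return { initialize: initialize, isInitialized: isInitialized };
}

// In a browser this would be something like:
// var midi = createMidi(navigator);
// and the C# side would await the result of calling "midi.initialize".
```

If the promise is rejected, the awaiting C# call would presumably throw, which would cover the failure path as well.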

Timestamp: 11:26

Substep 4: listing MIDI ports

Listing ports doesn’t involve promises, but it does involve an iterator, and I’m dubious that I’ll be able to return that directly. Let’s create an array in JavaScript and copy ports into it:

    function getInputPorts() {
        var ret = [];
        access.inputs.forEach(input => ret.push({ id: input.id, name: input.name }));
        return ret;
    }

(I initially tried just pushing input into the array, but that way I didn’t end up with any data – it’s not clear to me what JSON was returned across the JS/.NET boundary, but it didn’t match what I expected.)
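My best guess at what happened, as a sketch: JSON serialization only includes an object's own enumerable properties, and browser objects such as MIDI ports typically expose their data through getters on the prototype. The `FakePort` class below is a made-up stand-in for a real port, and the assumption that the interop layer behaves like `JSON.stringify` is mine.

```javascript
// Hypothetical stand-in for a MIDI port: the values are only reachable
// through prototype getters, not through own properties.
class FakePort {
  #id;
  #name;
  constructor(id, name) {
    this.#id = id;
    this.#name = name;
  }
  get id() { return this.#id; }
  get name() { return this.#name; }
}

const port = new FakePort("input-0", "5- TD-27");

// Serializing the object directly finds no own properties at all.
const direct = JSON.stringify(port);

// Copying the values into a plain object gives JSON something to work with.
const copied = JSON.stringify({ id: port.id, name: port.name });

console.log(direct, copied);
```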

In .NET I then just need to declare a class to receive the data:

    public class MidiPort
    {
        [JsonPropertyName("id")]
        public string Id { get; set; }

        [JsonPropertyName("name")]
        public string Name { get; set; }
    }

And I can get the input ports, and display them via a field that’s hooked up in the Razor page:

    var inputs = await JSRuntime.InvokeAsync<List<MidiPort>>("midi.getInputPorts", Timeout);
    inputDevices = string.Join(", ", inputs.Select(input => $"{input.Id} ({input.Name})"));

Success!

Timestamp: 11:46 (That was surprisingly quick.)

Step 4: Retrieve the “kit 1” name in Blazor

We need two extra bits of MIDI functionality: sending and receiving data. I’m hoping that exchanging byte arrays via Blazor will be straightforward, so this should just be a matter of creating a callback and adding functions to the JavaScript to send messages and add a callback when a message is received.

Timestamp: 12:16

Okay, well it turned out that exchanging byte arrays wasn’t quite as simple as I’d hoped: I needed to base64-encode on the JS side, otherwise it was transmitted as a JSON object. Discovering that went via creating a MidiMessage class, which I might as well keep around now that I’ve got it. I can now receive messages.

Timestamp: 12:21

Blazor’s state change detection doesn’t include calls to List.Add, which is reasonable. It’s a shame it doesn’t spot ObservableCollection.Add either, but we can fix this just by calling StateHasChanged.

I now have a UI that can display messages. The three bits involved (as well as the simple MidiMessage class) are a callback class that delegates to an action:

    public class MidiMessageHandler : IDisposable
    {
        public DotNetObjectReference<MidiMessageHandler> Proxy { get; }
        private readonly Action<MidiMessage> handler;

        public MidiMessageHandler(Action<MidiMessage> handler)
        {
            Proxy = DotNetObjectReference.Create(this);
            this.handler = handler;
        }

        [JSInvokable]
        public void OnMessageReceived(MidiMessage message) => handler(message);

        public void Dispose() => Proxy.Dispose();
    }

The JavaScript to use that:

    function addMessageHandler(portId, handler) {
        access.inputs.get(portId).onmidimessage = function (message) {
            // We need to base64-encode the data explicitly, so let's create a new object.
            var jsonMessage = { data: window.btoa(message.data), timestamp: message.timestamp };
            handler.invokeMethodAsync("OnMessageReceived", jsonMessage);
        };
    }

And then the C# code to receive the callback, and subscribe to it:

    // In InitializeMidi()
    var messageHandler = new MidiMessageHandler(MessageReceived);
    await JSRuntime.InvokeVoidAsync("midi.addMessageHandler", Timeout, inputs[0].Id, messageHandler.Proxy);

    // Separate method for the callback - we could have used a local
    // method or lambda though.
    private void MessageReceived(MidiMessage message)
    {
        messages.Add(BitConverter.ToString(message.Data));
        // Blazor doesn't "know" that the collection has changed - even if we make it an ObservableCollection
        StateHasChanged();
    }

Timestamp: 12:26

Now let’s try sending the SysEx message to request kit 1’s name… this should be the easy bit!

… except it doesn’t work. The log shows the following error:

Unhandled exception rendering component: Failed to execute ‘send’ on ‘MIDIOutput’: No function was found that matched the signature provided.

Maybe this is another base64-encoding issue. Let’s try explicitly base64-decoding the data in JavaScript…

Nope, same error. Let’s try hard-coding the data we want to send, using JavaScript that has worked before…

That does work, which suggests my window.atob() call isn’t behaving as expected.

Now I could use some logging here, but let’s try putting a breakpoint in JavaScript. I haven’t done that before. Hopefully it’ll open in the Chrome console.

Whoa! The breakpoint worked, but in Visual Studio instead. That’s amazing! I can see that atob(data) has returned a string, not an array.

This Stack Overflow question has a potential option. This is really horrible, but if it works, it works…

And it works. Well, sort of. The MIDI message I get back is much longer than I’d expected, and it’s longer than I get in JSFiddle. Maybe my callback wasn’t working properly before.

Timestamp: 12:42

Okay, so btoa() isn’t what I want either. This Stack Overflow question goes into details, but the accepted answer uses a ton of code.

Hmmm… right-clicking on “wwwroot” gives me an option of “Add… Client-Side Library”. Let’s give that a go and see if it makes both sides of the base64 problem simpler.

Timestamp: 12:59

Well it didn’t “just work”. The library was added to my wwwroot directory, and trying to use it from midi.js added an import statement at the start of midi.js… which then caused an error of:

Cannot use import statement outside a module

I guess I really need to know what a JavaScript module is, and whether midi.js should be one. Hmm. Time for lunch.

Saturday afternoon

Timestamp: 14:41

Back from lunch and a chat with my parents. Let’s have another look at this base64 library…

(Side note: Visual Studio, while I’m not doing anything at all and I don’t have any documents open, is taking up 80% of my CPU. That doesn’t seem right. Oh well.)

If I just try to import the byte-base64 script directly with a script tag then I end up with an error of:

Uncaught ReferenceError: exports is not defined

Bizarrely enough, the error message often refers to lib.ts, even if I’ve made sure there’s no TypeScript library in wwwroot.

Okay, I’ve now got it to work, by the horrible hack of copying the file to base64.js in wwwroot and removing everything about exports. I may investigate other libraries at some point, but fundamentally this inability to correctly base64 encode/decode has been the single most time-consuming and frustrating part so far. Sigh.

(Also, the result is something I’m not happy to put on GitHub, as it involves just a copy of the library file rather than using it as intended.)
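With hindsight, a library-free version may be possible. The sketch below is not what the app uses, and the function names are hypothetical. It relies only on the built-in `btoa` and `atob`, which work on strings where each character represents one byte, so the byte array has to be converted to and from such a string explicitly. It also shows what passing the array straight to `btoa` does, which would explain the unexpectedly long messages earlier.

```javascript
// Encode bytes (e.g. the Uint8Array from a MIDI message) as base64.
function bytesToBase64(bytes) {
  let binary = "";
  for (const b of bytes) {
    binary += String.fromCharCode(b);
  }
  return btoa(binary);
}

// Decode base64 back into a Uint8Array, rather than the string that
// atob() returns on its own.
function base64ToBytes(base64) {
  return Uint8Array.from(atob(base64), ch => ch.charCodeAt(0));
}

const message = new Uint8Array([0xf0, 0x41, 0x10, 0x00, 0xf7]);
const encoded = bytesToBase64(message);
const decoded = base64ToBytes(encoded);

// Passing the array straight to btoa() first converts it to the text
// "240,65,16,0,247", and then encodes that text instead of the bytes.
const accidental = btoa(message);

console.log(encoded, Array.from(decoded), atob(accidental));
```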

Timestamp: 15:01

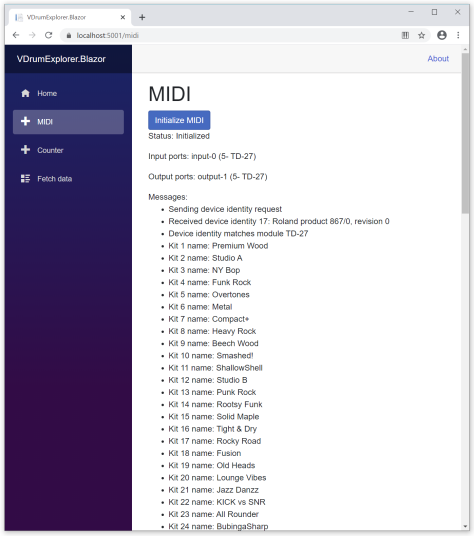

Step 5: Retrieve all kit names in Blazor

Okay, so I’ve got the not-at-all decoded kit name successfully.

Let’s try looping to get all of them, decoding as we go.

This will involve copying some of the “real” V-Drum Explorer code so I can create Data Request messages programmatically, and decode Data Set messages. While I’d love to just add a reference to VDrumExplorer.Midi, I’m definitely not there yet. (I’d need to remove the managed-midi references and replace everything I use. That’s going to be step 6, maybe…)
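To give an idea of the decoding side, here's a rough JavaScript sketch (the real code is C#, copied from V-Drum Explorer, and isn't shown here). It assumes a Data Set (DT1, command 0x12) message laid out like the request earlier: a one-byte device ID, a 4-byte model ID and a 4-byte address, followed by the data and a checksum. It also assumes the name is plain ASCII padded with spaces. The function names and the sample kit name are made up.

```javascript
// Roland-style checksum over the address and data bytes.
function rolandChecksum(bytes) {
  const sum = bytes.reduce((total, b) => total + b, 0);
  return (128 - (sum % 128)) % 128;
}

// Parses a Data Set (DT1) message under the layout assumptions above.
function parseDataSet(message) {
  const bytes = Array.from(message);
  const last = bytes.length - 1;
  if (bytes[0] !== 0xf0 || bytes[1] !== 0x41 || bytes[last] !== 0xf7) {
    throw new Error("Not a Roland SysEx message");
  }
  if (bytes[7] !== 0x12) {
    throw new Error("Not a Data Set message");
  }
  const address = bytes.slice(8, 12);
  const data = bytes.slice(12, last - 1);
  if (rolandChecksum([...address, ...data]) !== bytes[last - 1]) {
    throw new Error("Checksum mismatch");
  }
  return { address, data };
}

// Assumption: the name is plain ASCII, padded with trailing spaces.
function decodeName(data) {
  return String.fromCharCode(...data).trimEnd();
}

// A made-up response for illustration; "Example Kit" is not a real kit name.
const nameBytes = Array.from("Example Kit ", ch => ch.charCodeAt(0));
const address = [0x04, 0x00, 0x00, 0x00];
const sampleResponse = [
  0xf0, 0x41, 0x10, 0x00, 0x00, 0x00, 0x63, 0x12,
  ...address, ...nameBytes,
  rolandChecksum([...address, ...nameBytes]),
  0xf7
];

console.log(decodeName(parseDataSet(sampleResponse).data));
```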

Timestamp: 15:41

Success! After copying quite a bit of code, everything just worked… nothing was particularly unexpected at this stage, which is deeply encouraging.

I’m going to leave it there for the day, but tomorrow I can try to change the abstraction used by V-Drum Explorer so that it can all integrate nicely…

Saturday evening

Timestamp: 17:55

Interlude: refactoring MIDI access

Okay, so it turns out I really don’t want to wait until tomorrow. However, the next step is going to be code I genuinely want to keep, so let’s commit everything I’ve done so far to a new branch, but then go back to the branch I was on.

The aim of this step is to make the MIDI access replaceable. It doesn’t need to be “hot-replaceable” – at least not yet – so I don’t mind using a static property for “the current MIDI implementation”. I may make it more DI-friendly later on.

The two projects I’m going to change are VDrumExplorer.Model, and VDrumExplorer.Midi. Model refers to Midi at the moment, and Midi refers to the managed-midi library. The plan is to move most of the code from Midi to Model, but without any reference to managed-midi types. I’ll define a few interfaces (e.g. IMidiInput, IMidiOutput, IMidiManager) and write all the rest of the MIDI-related code to refer to those interfaces. I can then ditch VDrumExplorer.Midi, but add VDrumExplorer.Midi.ManagedMidi which will implement my Model interfaces in terms of the managed-midi library – with the hope that tomorrow I can have a Blazor implementation of the same interfaces.

I have confidence that this will work reasonably well, as I’ve done the same thing for audio recording/playback devices (with an NAudio implementation project).

Let’s go for it.

Timestamp: 18:03

Okay, that went pretty much as planned. I was actually able to simplify the code a bit, which is nice. There’s potentially more refactoring to do, now that ModuleAddress, DataSegment and RolandMidiClient are in the same project – I can make RolandMidiClient.RequestDataAsync accept a ModuleAddress and return a DataSegment. That can come later though.

(Admittedly testing this found that kit 1 has an invalid value for one instrument. I’ll need to look into that later, but I don’t think it’s a new issue.)

Timestamp: 18:55

The Blazor MIDI interface implementation can wait until tomorrow – but I don’t anticipate it being tricky at all.

Sunday morning

Timestamp: 06:54

Okay, let’s do this :) My plan is:

- Remove all the code that I copied from the rest of V-Drum Explorer into the Blazor project; we shouldn’t need that now.

- Add a reference from the Blazor project to VDrumExplorer.Model

- Implement the MIDI interfaces

- Rework the code just enough to get the previous functionality working again

- Rewrite the code to not have any hard-coded module addresses, instead detecting the right schema and listing the kits for any attached (and supported) module, not just the TD-27

- Maybe publish it

Removing the code and adding the project reference are both trivial, of course. At that point, the code doesn’t compile, but I have a choice: I could get the code compiling again using the MIDI interfaces, but without implementing the interfaces, or I could implement the interface first.

Rewriting existing application code

Despite the order listed above, I’m going to rewrite the application part first, because that will clear the error list, making it easier to spot any mistakes while I am implementing the interface. The downside is that there’ll be bits of code I need to stash somewhere, because they’ll be part of the MIDI implementation eventually, but without wanting to get them right just yet.

I create a WebMidi folder for the implementation, and a scratchpad.txt file in it to copy any “not required right now” code.

At this point I’m getting really annoyed with the syntax highlighting of the .razor file. I know it’s petty, but the grey background just for code is really ugly to me:

As I’m going to have to go through all the code anyway, let’s actually use “Add New Razor Page” this time, and move the code into there as I fix it up.

Two minutes later, it looks like what VS provides (at least with that option) isn’t quite what I want. What I really want is a partial class, not a code-behind for the model. It’s entirely possible that they’d be equivalent in this case, but the partial class is closer to what I have right now. This blog post tells me exactly what I need.

Timestamp: 07:10

Starting to actually perform the migration, I realise I need an ILogger. For the minute, I’ll use a NullLogger, but later I’ll want to implement a logger that adds to the page. (I already have a Log method, so this should be simple.)

Timestamp: 07:19

That was quicker than I’d expected. Of course, I don’t know whether or not it works.

Implementing the MIDI interfaces

Creating the WebMidiManager, WebMidiInput and WebMidiOutput classes shows me just how little I really need to do – and it’s all code I’ve written before, of course.

For the moment, I’m not going to worry about closing the MIDI connection on IMidiInput.Dispose() etc – we’ll just leave everything open once it’s opened. What I will do is use a single .NET-side event handler for each input port, and do event subscribe/remove handling on the .NET side. If I don’t manage that, the underlying V-Drum Explorer interface will end up getting callbacks on client instances after disposal, and other oddities. The outputs can just be reused though – they’re stateless, effectively.

Timestamp: 07:56

Okay, so that wasn’t too bad. No significant surprises, although there’s one bit of slight ugliness: my IMidiOutput.Send(MidiMessage) method is synchronous, but we’re calling into JavaScript interop which is always asynchronous. As it happens, that’s mostly okay: the Send method is meant to be effectively fire-and-forget anyway, but it does mean that if the call fails, we won’t spot it.

Let’s see if it actually works…

Nope, not yet – initialization fails:

Cannot read property ‘inputs’ of null

Oddly, a second click of the button does initialize MIDI (although it doesn’t list the kits yet). So maybe there’s a timing thing going on here. Ah yes – I’d forgotten that for initialization, I’ve got to await the initial “start the promise” call, then await the promise handler. That’s easy enough.

Okay, so that’s fixed, but we’re still not listing the kits. While I can step through in the debugger (into the Model code), it would really help if I’d got a log implementation at this point. Let’s do that quickly.

Timestamp: 08:07

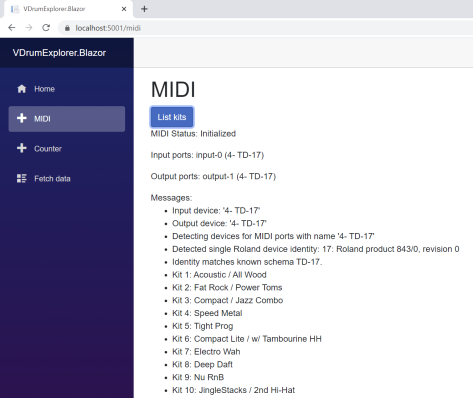

Great – I now get a nice log of how device detection is going:

- Input device: ‘5- TD-27’

- Output device: ‘5- TD-27’

- Detecting devices for MIDI ports with name ‘5- TD-27’

- No devices detected for MIDI port ‘5- TD-27’. Skipping.

- No known modules detected. Aborting

So it looks like we’re not receiving a response to our “what devices are on this port” request.

Nothing’s obviously wrong with the code via a quick inspection – let’s add some console logging in the JavaScript side to get a clearer picture.

Hmm: “Sending message to port [object Object]” doesn’t sound promising. That should be a port ID. Ah yes, simple mistake in WebMidiOutput. This line:

runtime.InvokeVoidAsync("midi.sendMessage", runtime, message.Data);

should be

runtime.InvokeVoidAsync("midi.sendMessage", port, message.Data);

It’s amazing how often my code goes wrong as soon as I can’t lean on static typing…

Fix that, and boom, it works!
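As a guard against that kind of mix-up, one option is a cheap runtime check on the JavaScript side of the interop boundary. The sketch below is an assumption about what a function like `midi.sendMessage` could look like, not the actual code from this post: `createSender` and `outputsById` are hypothetical, and the message is shown as a plain byte array rather than however the real code encodes it.

```javascript
// Hypothetical sketch of a defensive sendMessage: fail with a descriptive
// error when the first argument is not a port ID string, rather than
// carrying on with "[object Object]".
function createSender(outputsById) {
  return function sendMessage(portId, data) {
    if (typeof portId !== "string") {
      throw new TypeError(`Expected a port ID string, got ${typeof portId}`);
    }
    const port = outputsById.get(portId);
    if (port === undefined) {
      throw new Error(`Unknown output port: ${portId}`);
    }
    port.send(data);
  };
}
```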

Generalizing the application code

Timestamp: 08:16

So now I can list the TD-27 kits, but it won’t list anything if I’ve got my TD-17 connected instead… and I’ve got fairly nasty code computing the module addresses to fetch. Let’s see how much easier I can make this now that I’ve got the full power of the Model project to play with…

Timestamp: 08:21

It turns out it’s really easy – but very inefficient. I don’t have any public information in the schema about which field container stores the kit name. I can load all the data for one kit at a time, and retrieve the formatted kit name for that loaded data, but that involves loading way more information than I really need.

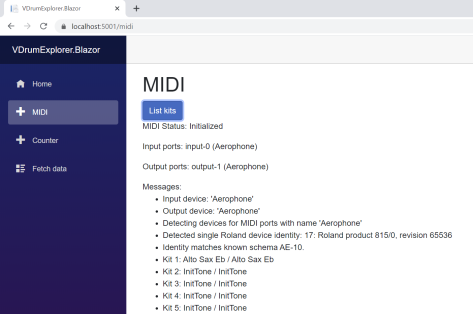

So that’s not ideal – but it worked first time. First I listed the kits on my TD-27, and that worked as before. Turn that off and turn on the TD-17, rerun, and boom:

It even worked with my Aerophone, which I only received last week. (They’re mostly “InitTone” as the Aerophone splits user kits from preset kits, and the user kits aren’t populated to start with. The name is repeated as there’s no “kit subname” on the Aerophone, and I haven’t yet changed the code to handle that. But hey, it works…)

That’s enough for this morning, certainly. I hadn’t honestly expected the integration to go this quickly.

This afternoon I’ll investigate hosting options, and try to put the code up for others to test…

Timestamp: 08:54

After just tidying up this blog post a bit, I’ve decided I definitely want to include the code on GitHub, and publish the result online. That will mean working out what to do with the base64 library (which is at least MIT-licensed, so that shouldn’t be too bad) but this will be a fabulous thing to show in the talks I give about V-Drum Explorer. And everyone can laugh at my JavaScript, of course.

Sunday afternoon

Publishing as a web site

Timestamp: 13:12

Running dotnet publish -c Release in the Blazor directory creates output that looks like I should be able to serve it statically, which is what I’d hoped for, having unchecked the “ASP.NET Core Hosting” box on project creation.

One simple way of serving static content is to use Google Cloud Storage, uploading all the files to a bucket and then configuring the bucket appropriately. Let’s give it a go.

The plan is to basically follow the tutorial, but once I’ve got a simple index.html file working, upload the Blazor application. I already have HTTPS load balancing with Google Cloud, and the jonskeet.uk domain is hosted in Google Domains, so it should all be straightforward.

I won’t take you through all the steps I went through, because the tutorial does a good job of that – but the sample page is up and working, served over HTTPS with a new Google-managed SSL certificate.

Timestamp: 13:37

Time to upload the Blazor app. It’s not in a brilliant state at the moment – once this step is done I’ll want to get rid of the “counter” sample etc, but that can come later. I’m somewhat expecting to have to edit MIME types as well, but we’ll see.

In the Google Cloud Storage browser, let’s just upload all the files – yup, it works. Admittedly it’s slightly irritating that I had to upload each of the directories separately – just uploading wwwroot would create a new wwwroot directory. I expect that using gsutil from the command line will make this easier in the future.

But then… it just worked!

Timestamp: 13:51 (the previous step only took a few minutes at the computer, but I was also chasing our cats away from the frogs they were hunting in the garden)

Tidying up the Blazor app

The point of the site is really just a single page. We don’t need the navbar etc.

Timestamp: 14:12

Okay, that looks a lot better :)

Speeding up kit name access

If folks are going to be using this though, I really want to speed up the kit loading. Let’s see how hard it is to do that – it should all be in Model code.

Timestamp: 14:20

Done! 8 minutes to implement the new functionality. (A bit less, actually, due to typing up what I was going to do.)

The point of noting that isn’t to brag – it’s to emphasize that having performed the integration with the main Model code (which I’m much more comfortable in) I can develop really quickly. Doing the same thing in either JavaScript or in the Blazor code would have been much less pleasant.

Republish

Let’s try that gsutil command I was mentioning earlier:

- Delete everything in the Storage bucket

- Delete the previous release build

- Publish again with

dotnet publish -c Release

cd bin/Release/netstandard2.1/publish

gsutil -m cp -r . gs://vdrumexplorer-web

The last command explained a bit more:

– gsutil: invoke the gsutil tool

– -m: perform operations in parallel

– cp: copy

– -r: recursively

– .: source directory

– gs://vdrumexplorer-web: target bucket

Hooray – that’s much simpler than doing it through the web interface (useful as that is, in general).

Load balancer updating

My load balancer keeps on losing the configuration for the backend bucket and certificate. I strongly suspect that’s because it was created in Kubernetes Engine. What I should actually do is update the k8s configuration and then let that flow.

Ah, it turns out that the k8s ingress doesn’t currently support a Storage Bucket backend, so I had to create a new load balancer. (While I could have served over HTTP without a load balancer, in 2020 anything without HTTPS support feels pretty ropy.)

Of course, load balancers cost money – I may not keep this up forever, just for the sake of a single demo app. But I’m sure I can afford it for a while, and it could be useful for other static sites too.

The other option is to serve the application from my k8s cluster – easy enough to do, just a matter of adding a service.

Conclusion

Okay, I’m done. This has been an amazing weekend – I’m thrilled with where I ended up. If you’ve got a suitable Roland instrument, you can try it for yourself at https://vdrumexplorer.jonskeet.uk.

The code isn’t on GitHub just yet, but I expect it to be within a week (in the normal place).

(Edited) I was initially slightly disappointed that it didn’t seem to work on my phone. I’m not sure what happened when I tried initially (and I don’t know why it’s still claiming the connection is insecure), but I’ve now managed to get the site working on my phone, connecting over Bluetooth to my TD-27. Running .NET code talking to JavaScript talking MIDI over Bluetooth to list the contents of my drum module… it really just feels like it shouldn’t work. But it does.

The most annoying aspect of all of this was definitely the base64 issue… firstly that JavaScript doesn’t come with a reliable base64 implementation (for the situation I’m in, anyway) and secondly that adding a client library was rather more fraught than I’d have expected. I’m sure it’s all doable, but beyond my level of expertise.

Overall, I’ve been very impressed with Blazor, and I’ll definitely resurrect the Noda Time Blazor app for time zone conversions that I was working on a while ago.